Anthropic Shifts Claude AI to Opt-Out Data Training—Why It Matters

Anthropic has been largely successful in branding its Claude AI tools as the more responsible alternative to some of its competitors. A big part of this has been the perception that responsible principles, trustworthiness, and the protection of user data are built in from the ground up.

But perhaps no more?

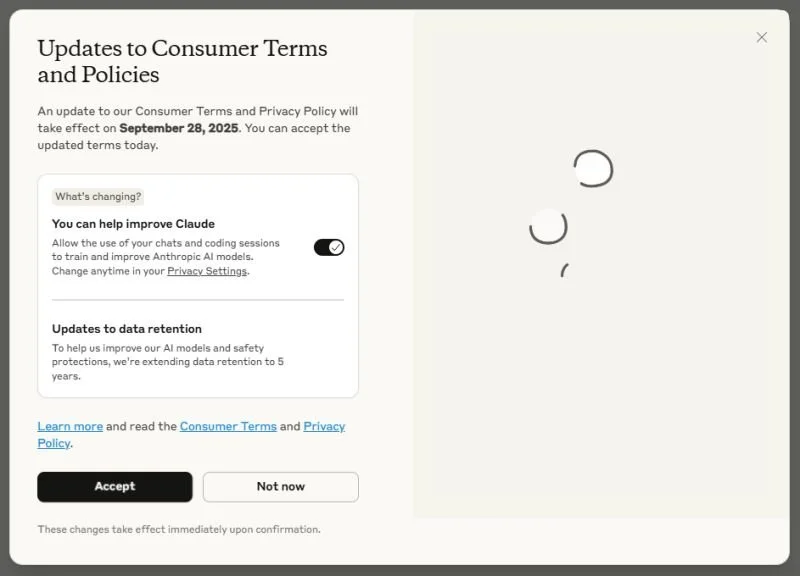

I was surprised to see this pop-up when accessing Claude this morning, particularly the preselected options that opt me in by default to having my data used for model training unless I read what is being asked and turn it off. I worried I might have had the wrong setting selected all along. But on further digging, it appears that the main thrust of Anthropic's change of terms is to move model training on your data from opt-in to opt-out and to extend data retention to 5 years.

This is not cool. This is a step backwards for user trust. And while it's great that they have chosen to force a user choice so prominently, this still feels like a dark pattern: a design that cosplays as involving the user while knowing that most will just click 'accept' without thinking.

Claude is a very good model, and I'll certainly continue to use it. But it will be harder now to describe Anthropic as the AI company that is most concerned about the impact of this technology on users.

From a broader perspective, this highlights the risks of Shadow AI in business. Do you want Anthropic controlling data about your business for the next 5 years? You might be only one user misclick away from that happening, and even if your users select the right option here, what about ChatGPT or DeepSeek? For businesses, the right solution is an enterprise-grade AI tool like Microsoft 365 Copilot, where you own the data. And luckily, if you have Microsoft 365, you already have one: Microsoft 365 Copilot Chat.

If you're a Claude user, when you see this pop-up, take care to ensure you select the right option.

Posted on LinkedIn on 08/31/2025