ChatGPT Health: Smart Evolution or a Risky Step Into Sensitive Territory?

Someone asked me for my take on the ChatGPT Health announcement.

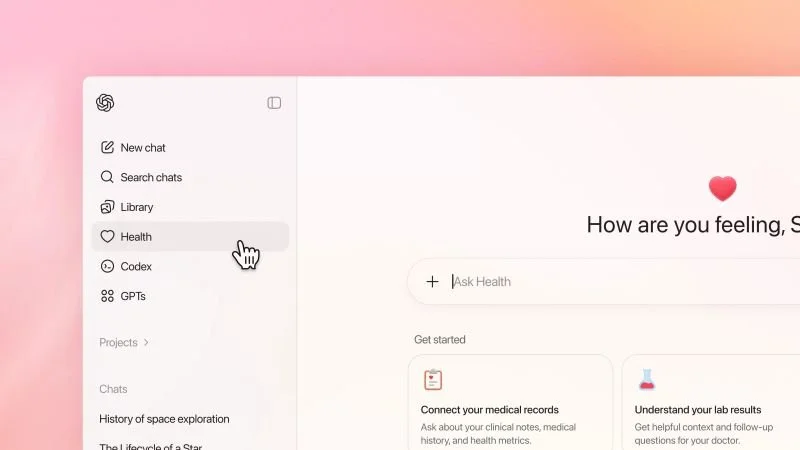

OpenAI has stepped into territory that other tech vendors, notably Apple, have entered before: building a dedicated solution for health information. This responds to the fact that many people already use ChatGPT (and other AI chatbots) for health queries, even though these general-purpose tools have no specific contextual knowledge or safeguards to ensure the accuracy or safety of those interactions.

What have they done right?

➡️ ChatGPT Health is a separate service, and the announcement mentions a separate (presumably fine-tuned) model that was developed with relevant subject matter experts and benchmarked against different standards than OpenAI's primary ChatGPT models.

➡️ ChatGPT Health data is segmented from ChatGPT: it has its own history, memories, etc., and can draw on external data such as imports from Apple Health. This segmentation is potentially important not just for privacy, but also to ensure that health-related memories do not bias ChatGPT outputs that are unrelated to health.

➡️ Taking at face value that a lot of people are already using ChatGPT for health information, it is sensible and prudent of OpenAI to build a service that puts common-sense protections around those interactions and that data. It is not overly generous to frame this as OpenAI trying to fix a problem many users may be unknowingly creating for themselves.

What is potentially problematic?

➡️ Health data is segmented from other information and encrypted, but simply ensuring data is encrypted everywhere it goes does not offer the same end-to-end encryption protections that a product like Apple Health provides when syncing to the cloud. This means the data remains accessible to OpenAI, and while OpenAI may guarantee not to use it for model training, other risks, such as litigation that compels the release of chat logs, could still apply.

➡️ A tool based on OpenAI's models will always have a greater-than-zero chance of hallucinating an answer. This is a foundational LLM problem that remains unresolved for every use case, which is why human-in-the-loop oversight is still so essential for AI tools. However, by its very nature, this capability is designed to offer insights to users who are not qualified to supervise the outputs and who may assume greater accuracy because of the branding.

➡️ There is a tension between marketing a product intended to inform health decisions and standard terms of use that essentially assign all liability to the user. While OpenAI states this is not for diagnosis or treatment, we know users follow the marketing, not the small print.

If I seem on the fence, that's because I am. OpenAI and others must both innovate AND follow how people actually use their products. But this is a risky move for a company, and an industry, that seems addicted to taking on ever more risk.

First posted on LinkedIn on 01/11/2026.