Microsoft Reverses Course on Copilot AI Disclaimers—A Win for Responsible AI

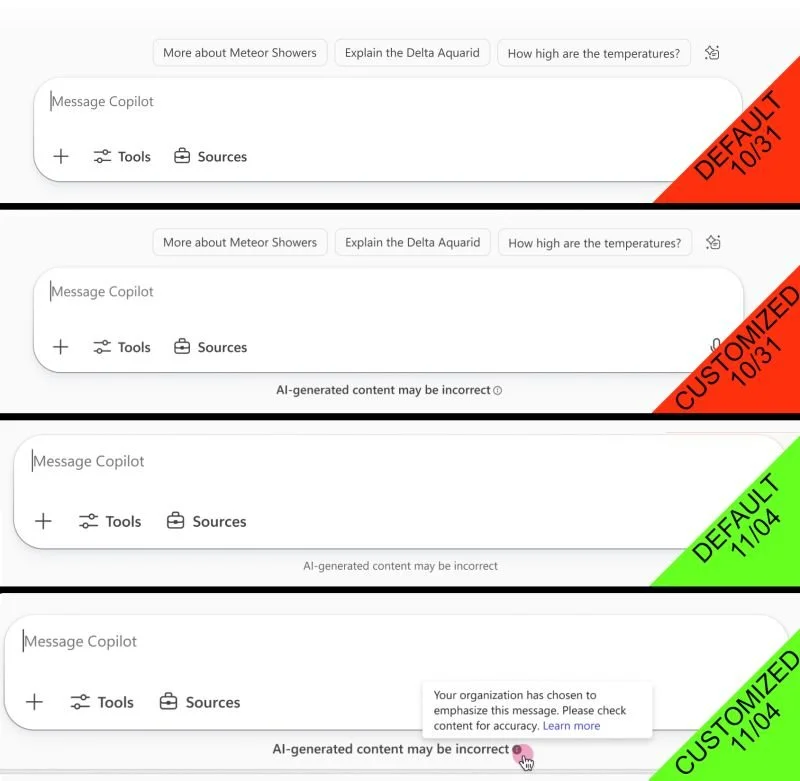

In a course correction, Microsoft has clarified via the Message center (MC1181766) that it does not intend to turn off AI disclaimers in Microsoft 365 Copilot by default, as had been communicated only a few days ago.

The earlier message cited "distraction" for users as a reason for eliminating these warnings. The revised message now states that the disclaimer "will always be visible" and that customization options are aimed at "improving user awareness and flexibility". What a difference five days has made to the company's position on this issue.

This is great news, but it potentially highlights cultural friction within Microsoft over the value of Responsible AI messaging versus distraction-free interfaces that maximize use while paying less attention to risk.

Kudos to those who decided to make this change, prioritizing responsible use. But, frankly, the face-saving "We have updated this message to clarify..." language, when the update is in fact a 180-degree turn, misses the opportunity to demonstrate that we are all learning together on these issues. Everyone, from individual users to the largest AI providers, is susceptible to striking the wrong balance between utility and safety with the AI tools we have available, and the most important bulwark against this risk is an open and honest conversation with all impacted stakeholders.

And, reflecting on my last post on this issue, I'd still be really interested to see whether any research shows that these messages are at all effective. Signage sometimes genuinely is bad because it distracts, but it is the impact, rather than the distraction, that we should be tracking.

First posted on LinkedIn on 11/06/2025.