As AI Warning Labels Disappear, Who’s Teaching Us to Drive?

A "first draft" that might be "usefully wrong." These were among the many small caveats and carve-outs that were conspicuous in the restrained way AI's capabilities were described when we first heard about Copilot a couple of years back.

Am I the only one who is worried that they are increasingly conspicuous in their absence?

Don't get me wrong. I am pro-AI, I use various AI tools every single day, and I'm a strong advocate for the value this technology can bring to our work and personal lives. But just as I believe in the value of driving AND in the importance of driver education that teaches safe practices, I believe the usefulness of AI has to be framed by learning how to use it safely.

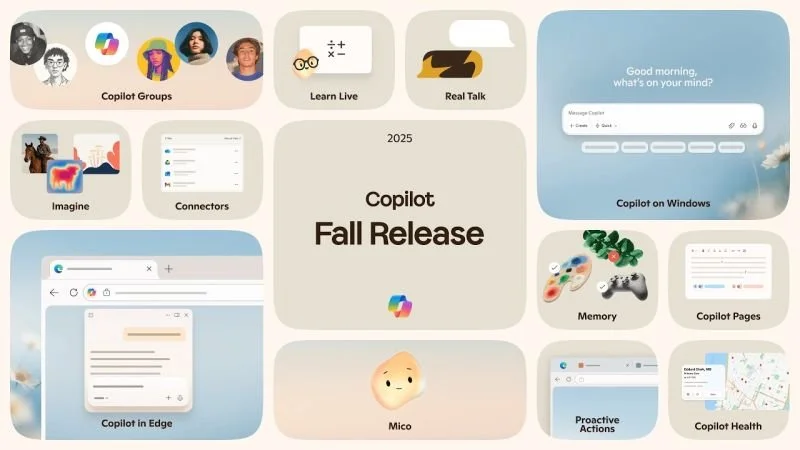

In this week's Copilot Sessions announcements, it was delightful to see Mustafa Suleyman bringing AI to unusual and underserved audiences. Focusing on how AI can help individuals across their lives, rather than just on business outcomes, is refreshing. But did Annie learn why her seemingly all-knowing AI helper might give her poor advice? Or does Felix understand that, when identifying mechanical fixes for esoteric sewing machines, Copilot might pull up the wrong information? I fully agree there is value in this technology for everyone, but there is also risk, and it becomes more apparent day by day as stories surface of the harm that blindly following AI can do to people's lives.

This is an incredibly difficult needle to thread. Trying to sell a shiny new product while running a public safety messaging campaign about its technology is a big ask, even for AI providers at the most responsible end of the spectrum, like Microsoft. But ultimately, if the benefits of AI technology are to be distributed equitably in our society, it's vital that non-traditional users like Annie or Felix understand those risks just as well as Mustafa does.

What's the solution? Well, we definitely don't have driver education and licenses because they made it easier for Ford to sell cars. Public safety provisions for drivers only came after governments noticed how harmful this rapidly spreading technology could be.

Am I advocating for mandatory AI driver's licenses? No. But as the AI providers' warning labels shrink or vanish altogether, something needs to fill the void if users are to realize the benefits rather than the harms.

For my part, I remain committed to making safety and responsible-use considerations a core part of my consulting work on AI adoption for my clients. And for individual users, Annie and Felix included, my Responsible AI for Business Users online course offers a solid introduction to staying safe while getting the best from AI technology (see the link in the comments).

How are you navigating this new, less responsibility-focused push for AI everywhere? Are you seeing lots of new risks, or just new opportunities?

First posted on LinkedIn on 10/26/2025.