Microsoft Tightens App Permissions After ChatGPT Sparks Data Security Concerns

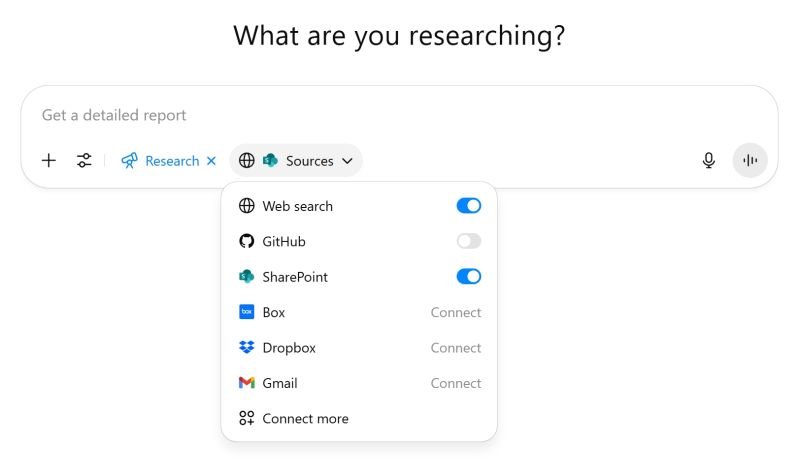

When OpenAI released the capability for ChatGPT's Deep Research to connect directly to Microsoft 365 data with simple end-user consent, many IT departments suddenly took notice of a data exposure risk that had lived in Microsoft 365's default settings for a very long time.

By default, any user can share any file they have access to with a service like ChatGPT as easily as they can share their free/busy calendar information with a service like Calendly. The underlying app connectivity technology is exactly the same, and it wasn't built with the risks of AI services in mind.

Now, with MC1097272 in the Microsoft 365 message center, it seems that Microsoft has taken note of this shift in the landscape too. Among a set of updates titled "Microsoft 365 Upcoming Secure by Default Settings Changes" is one that requires admin consent for third-party apps that request access to files or sites (OneDrive or SharePoint).

Organizations will want to check their app approval settings and make sure a process for granting admin consent is in place. These changes will start rolling out in July and be complete by mid-August.
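One way to start that check is to look at which apps individual users have already consented to. The sketch below is illustrative only: it assumes you have exported delegated permission grants from the Microsoft Graph `oauth2PermissionGrants` list as JSON records with `clientId`, `consentType`, `principalId`, and `scope` fields. The function name, the sample IDs, and the choice to flag every scope starting with `Files.` or `Sites.` are assumptions for the example, not a statement of exactly which scopes the new default covers.

```python
# Assumption: delegated scopes with these prefixes cover OneDrive/SharePoint content.
FILE_SCOPE_PREFIXES = ("Files.", "Sites.")


def user_consented_file_grants(grants):
    """Return grants where an individual user (not an admin) consented to file or site scopes."""
    flagged = []
    for grant in grants:
        # "Principal" means a single user consented; "AllPrincipals" means admin consent.
        if grant.get("consentType") != "Principal":
            continue
        # The scope field is a space-separated string of permission names.
        scopes = (grant.get("scope") or "").split()
        risky = [s for s in scopes if s.startswith(FILE_SCOPE_PREFIXES)]
        if risky:
            flagged.append({
                "clientId": grant.get("clientId"),
                "principalId": grant.get("principalId"),
                "scopes": risky,
            })
    return flagged


# Hypothetical sample records, shaped like exported permission grants.
sample_grants = [
    {"clientId": "app-ai-assistant", "consentType": "Principal",
     "principalId": "user-1", "scope": "openid Files.Read.All Sites.Read.All"},
    {"clientId": "app-scheduler", "consentType": "Principal",
     "principalId": "user-2", "scope": "Calendars.Read"},
    {"clientId": "app-approved", "consentType": "AllPrincipals",
     "principalId": None, "scope": "Files.ReadWrite.All"},
]

for item in user_consented_file_grants(sample_grants):
    print(item["clientId"], item["principalId"], ", ".join(item["scopes"]))
```

A list like this gives admins a starting point for deciding which existing apps should go through the admin consent process and which grants should be revoked.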

❓ What do you think? Are you pleased that Microsoft is updating this default setting? And does it go far enough to address the increased risks of data sharing in the AI age?